So far, we’ve deployed our application on a Kubernetes cluster and manually verified that it is running. From here, we can either continue testing manually or create automated tests, also known as a test suite. While manual testing is the traditional approach, DevOps heavily emphasizes automating tests so that they can be integrated into your CD pipeline. This way, we eliminate many repetitive tasks, often called toil.

We’ve developed a Python-based integration test suite for our application, covering various scenarios. One significant advantage of this test suite is that it treats the application as a black box. It remains unaware of how the application is implemented, focusing solely on simulating end user interactions. This approach provides valuable insights into the application’s functional aspects.

Furthermore, since this is an integration test, it assesses the entire application as a cohesive unit, in contrast to the unit tests we ran in our CI pipeline, where we tested each microservice in isolation.
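To make the black-box idea concrete, here is a minimal sketch of what such a check might look like. It is not the actual integration-test.py from the repository, which is not shown here. The names BASE_URL, fetch_status, and check_home_page are hypothetical, and the sketch assumes only that the application answers plain HTTP requests:

```python
import urllib.error
import urllib.request

# Hypothetical default; the real suite targets the deployed application's address.
BASE_URL = "http://localhost"


def fetch_status(base_url: str, path: str = "/", timeout: float = 10.0) -> int:
    """Return the HTTP status code for base_url + path, as an end user would see it."""
    url = base_url.rstrip("/") + path
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses still carry a status code worth asserting on.
        return err.code


def check_home_page(base_url: str = BASE_URL) -> None:
    """Black-box check: only the public HTTP interface is exercised."""
    assert fetch_status(base_url, "/") == 200, "home page is not reachable"
```

Calling check_home_page() against a running deployment exercises the whole stack through its public interface. Nothing in the check knows which microservice served the response, which is what distinguishes it from the unit tests in the CI pipeline.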

Without further delay, let’s add the integration test to our CD pipeline.

CD workflow changes

So far, we have the following workflow files:

├── create-cluster.yml
├── dev-cd-workflow.yaml
└── prod-cd-workflow.yaml

Both the Dev and Prod CD workflows contain the following jobs:

jobs:
  create-environment-and-deploy-app:
    name: Create Environment and Deploy App
    uses: ./.github/workflows/create-cluster.yml
    secrets: inherit

To run the tests, both workflows also need a step that calls the run-tests.yml workflow, which performs the integration tests. Let’s look at that workflow to understand it better:

name: Run Integration Tests
on: [workflow_call]
jobs:
  test-application:
    runs-on: ubuntu-latest
    defaults:
      run:
        working-directory: ./tests
    steps:
      - uses: actions/checkout@v2
      - name: Extract branch name
        run: echo "branch=${GITHUB_HEAD_REF:-${GITHUB_REF#refs/heads/}}" >> $GITHUB_OUTPUT
        id: extract_branch
      - id: gcloud-auth
        name: Authenticate with gcloud
        uses: 'google-github-actions/auth@v1'
        with:
          credentials_json: '${{ secrets.GCP_CREDENTIALS }}'
      - name: Set up Cloud SDK
        id: setup-gcloud-sdk
        uses: 'google-github-actions/setup-gcloud@v1'
      - name: Get kubectl credentials
        id: 'get-credentials'
        uses: 'google-github-actions/get-gke-credentials@v1'
        with:
          cluster_name: mdo-cluster-${{ steps.extract_branch.outputs.branch }}
          location: ${{ secrets.CLUSTER_LOCATION }}
      - name: Compute Application URL
        id: compute-application-url
        run: external_ip=$(kubectl get svc -n blog-app frontend --output jsonpath='{.status.loadBalancer.ingress[0].ip}') && echo ${external_ip} && sed -i "s/localhost/${external_ip}/g" integration-test.py
      - id: run-integration-test
        name: Run Integration Test
        run: python3 integration-test.py

The workflow performs the following tasks:

- It is triggered exclusively through a workflow call.

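- It sets ./tests as the default working directory for its run steps.

- It checks out the committed code.

- It extracts the branch name, which is used to derive the cluster name (mdo-cluster-<branch>).

- It authenticates with Google Cloud using the GCP_CREDENTIALS service account credentials and sets up the gcloud CLI.

- It connects kubectl to the Kubernetes cluster, retrieves the external IP of the frontend service in the blog-app namespace, and substitutes it for localhost in integration-test.py.

- Using the application URL, it executes the integration test.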
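The shell one-liners in the Extract branch name and Compute Application URL steps are dense, so the following sketch restates their logic in Python for illustration only. The workflow itself uses shell parameter expansion, kubectl, and sed. The function names and the sample data are assumptions, and 203.0.113.10 is a documentation-only address rather than a real cluster IP:

```python
import json


def branch_name(head_ref: str, ref: str) -> str:
    """Mirror ${GITHUB_HEAD_REF:-${GITHUB_REF#refs/heads/}}."""
    if head_ref:  # set and non-empty for pull request events
        return head_ref
    prefix = "refs/heads/"
    return ref[len(prefix):] if ref.startswith(prefix) else ref


def extract_external_ip(service_json: str) -> str:
    """Mirror the jsonpath {.status.loadBalancer.ingress[0].ip} on a Service object."""
    service = json.loads(service_json)
    ingress = service.get("status", {}).get("loadBalancer", {}).get("ingress") or []
    if not ingress or "ip" not in ingress[0]:
        raise ValueError("Service has no external IP yet")
    return ingress[0]["ip"]


def point_tests_at(test_source: str, external_ip: str) -> str:
    """Equivalent of sed 's/localhost/<ip>/g': replace every occurrence of localhost."""
    return test_source.replace("localhost", external_ip)


# Illustrative inputs only (assumed shapes, not real cluster output).
sample_service = json.dumps(
    {"status": {"loadBalancer": {"ingress": [{"ip": "203.0.113.10"}]}}}
)
sample_test_source = 'BASE_URL = "http://localhost"\n'

cluster = "mdo-cluster-" + branch_name("", "refs/heads/dev")
ip = extract_external_ip(sample_service)
print(cluster)
print(point_tests_at(sample_test_source, ip), end="")
```

One design choice in this sketch is that extract_external_ip fails loudly when the load balancer has not been assigned an address yet, so a test run never starts against an empty host name.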

Now, let’s proceed to update the workflow and add tests using the following commands:

$ cp -r ~/modern-devops/ch13/integration-tests/.github .
$ cp -r ~/modern-devops/ch13/integration-tests/tests .
$ git add --all
$ git commit -m "Added tests"
$ git push

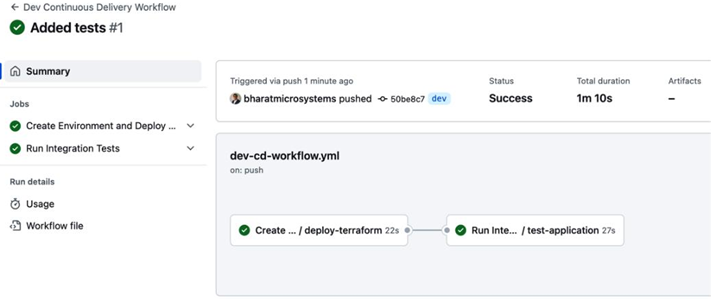

This should trigger the Dev CD GitHub Actions workflow again. You should see something like the following:

Figure 13.10 – Added tests workflow run

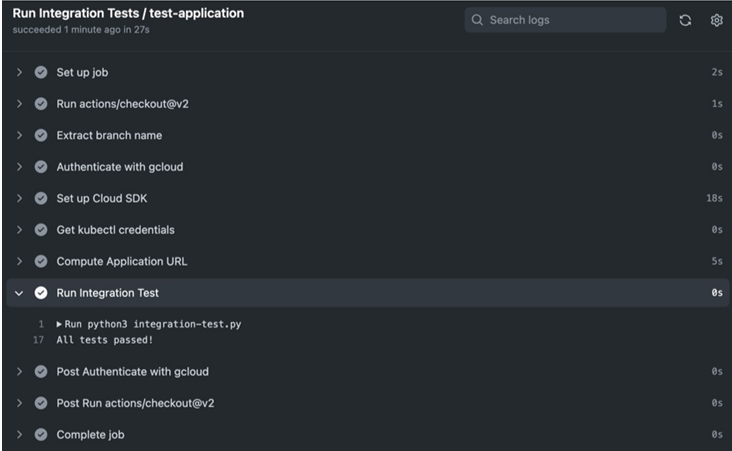

As we can see, there are two steps in our workflow, and both are now successful. To explore what was tested, you can click on the Run Integration Tests step, and it should show you the following output:

Figure 13.11 – The Run Integration Tests workflow step

As we can see, the Run Integration Tests step reports that all tests have passed.

While images are being built, deployed, and tested using your CI/CD toolchain, nothing yet prevents someone from deploying an arbitrary image directly to your Kubernetes cluster. You might be scanning all your images for vulnerabilities and mitigating them, but somewhere, someone might bypass all controls and deploy containers straight to your cluster. So, how can you prevent such a situation? The answer is binary authorization. Let’s explore this in the next section.